IoT gives you real-world signals. Generative AI gives you language, reasoning, and multimodal synthesis. Put them together and you get systems that explain, predict, and act—not just log data. This guide shows you how to design a GenAI+IoT stack that is useful (grounded in sensors), safe (guardrails), and cost-smart (edge when it matters). You’ll learn architecture patterns, best practices, pitfalls to avoid, and where GenAI actually beats classical ML.

What & Why: Benefits, Risks, Trade-offs

What is Generative AI in IoT?

Generative AI in IoT means layering generative models (LLMs, diffusion, multimodal models) on IoT streams to produce summaries, root-cause narratives, code suggestions, images, or action plans from raw telemetry, logs, and maintenance docs.

Where it helps

- Human-in-the-loop operations: Turn noisy time-series + logs into plain-English explanations and step-by-step playbooks.

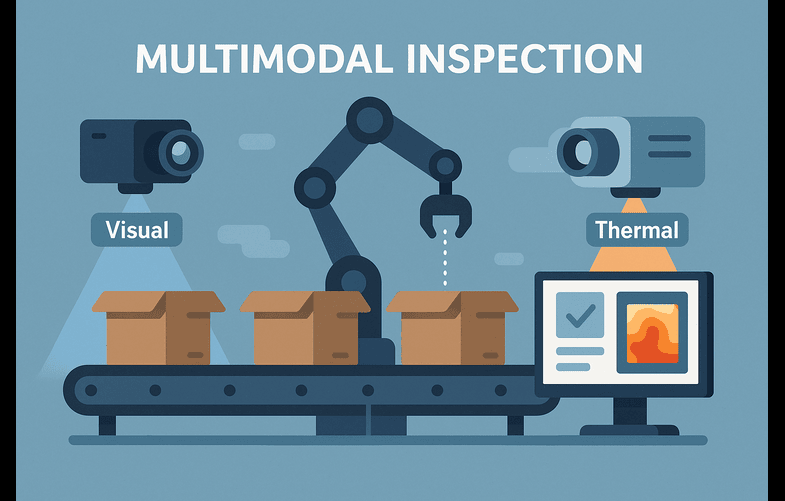

- Multimodal inspection: Combine camera + vibration + temperature to generate rich anomaly reports with snapshots.

- Knowledge retrieval: Answer “why did line 3 stop?” by grounding an LLM in your SOPs, tickets, and sensor context.

- Faster onboarding: Conversational “copilots” for equipment and sites.

Key trade-offs

- Latency vs Accuracy: Edge inference is fast and private but limited to smaller models; cloud inference adds a network round trip but can run larger, more flexible models.

- Cost vs Coverage: Always-on large models are expensive; use distillation, quantization, and event-triggered inference.

- Creativity vs Control: GenAI can explain and synthesize, but must be constrained by sensor facts and domain rules to avoid hallucinations.

Risks to manage

- Hallucinations without retrieval/validation

- Data leakage via prompts/logs

- Model drift and silent performance decay

- Over-automation without kill-switches

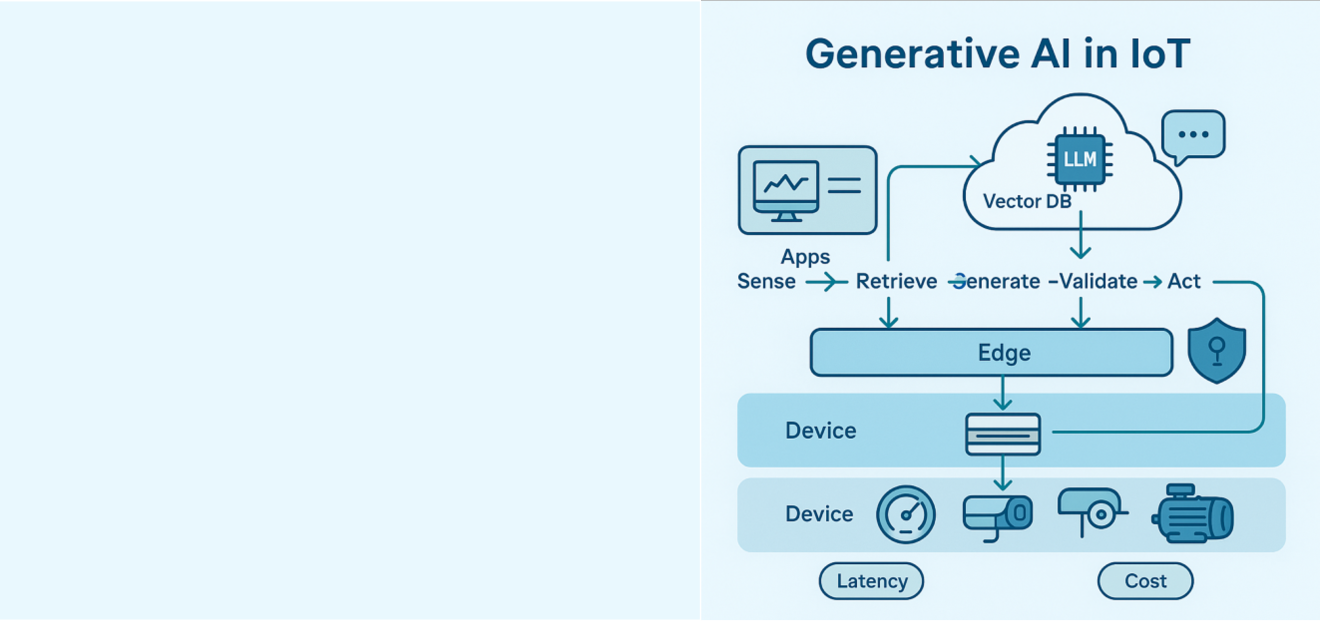

How It Works: A Mental Model & Reference Architecture

The Control-Loop-Aware GenAI Pattern

- Sense: Devices stream telemetry (MQTT/OPC UA/HTTP).

- Store & Index: Time-series DB (e.g., InfluxDB/Timescale) + object store for media. Create embeddings for manuals, SOPs, tickets—stored in a vector DB.

- Ground: When a question or alert arrives, retrieve relevant facts (sensor windows, SOP steps, past incidents).

- Generate: Pass a system prompt + retrieved context into a constrained LLM (function calling / JSON schema).

- Validate: Apply rules/thresholds, unit bounds, and anomaly checks.

- Act/Explain: Produce a report, work order, or API call. Always log provenance (which signals and docs were used).

- Learn: Capture feedback; update embeddings and prompts.
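The Ground step above can be sketched as a small retrieval function. This is a minimal illustration, not a production retriever: the `Doc` type, the toy two-dimensional embeddings, and the document IDs are all hypothetical, and a real system would get embeddings from an embedding model and query a vector DB instead of sorting a list.

```python
import math
from dataclasses import dataclass

@dataclass
class Doc:
    doc_id: str
    text: str
    embedding: list[float]  # assumed to come from your embedding model

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def top_k(query_vec, docs, k=2):
    """Return the k documents most similar to the query vector."""
    return sorted(docs, key=lambda d: cosine(query_vec, d.embedding), reverse=True)[:k]

def sensor_window(readings, start_ts, end_ts):
    """Keep (timestamp, value) pairs that fall inside the window, inclusive."""
    return [(ts, v) for ts, v in readings if start_ts <= ts <= end_ts]

def build_context(query_vec, docs, readings, start_ts, end_ts):
    """Bundle retrieved documents and sensor data, and record provenance."""
    hits = top_k(query_vec, docs)
    return {
        "documents": [{"id": d.doc_id, "text": d.text} for d in hits],
        "sensor_window": sensor_window(readings, start_ts, end_ts),
        "provenance": [d.doc_id for d in hits],
    }

# Toy data: hypothetical IDs and hand-written vectors.
docs = [
    Doc("sop-1", "Over-temp procedure for Pump A", [1.0, 0.0]),
    Doc("manual-3", "Conveyor belt tensioning", [0.0, 1.0]),
    Doc("ticket-7", "Pump A bearing replaced after over-temp", [0.9, 0.1]),
]
readings = [(0, 71.0), (10, 88.5), (20, 93.2)]
context = build_context([1.0, 0.0], docs, readings, start_ts=5, end_ts=20)
print(context["provenance"])
```

Returning the `provenance` list alongside the context makes it straightforward to log which documents were used when the Act/Explain step runs.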

Textual Diagram (layers)

- Device/Edge: sensors, cameras, microcontrollers; optional tinyML for triggers.

- Gateway/Edge AI: stream filtering, on-device inference (quantized models), buffering during link loss.

- Cloud/Core: ingestion bus, time-series DB, object store, vector DB + RAG, policy engine, LLM service, MLOps.

- Apps: dashboards, Copilot chat, CMMS/ERP connectors, alerting.

Prompting & Guardrails

- System prompts: define tone, units, safety, and output schema.

- RAG (Retrieval-Augmented Generation): fetch SOPs and recent sensor windows to ground outputs.

- Function calling: force structured JSON for downstream automation.

- Validators: unit ranges, rate-of-change limits, device state checks.
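As a rough sketch of how the validators above might sit behind a structured output, the function below parses a model's JSON and applies a schema check, a unit range, a rate-of-change limit, and a device state check. The field names, allowed actions, and numeric limits are assumptions made for illustration; real limits come from your equipment specifications.

```python
import json

# Hypothetical limits and names, chosen only for illustration.
ALLOWED_ACTIONS = {"inspect", "reduce_load", "shutdown"}
TEMP_RANGE_C = (-40.0, 150.0)   # assumed valid sensor range
MAX_RATE_C_PER_MIN = 5.0        # assumed rate-of-change limit

def validate_plan(raw_json, last_temp_c, minutes_elapsed, device_state):
    """Parse model output and return (plan, errors); no errors means accept."""
    try:
        plan = json.loads(raw_json)
    except json.JSONDecodeError:
        return None, ["output is not valid JSON"]
    if not isinstance(plan, dict):
        return None, ["output is not a JSON object"]

    errors = []
    # Schema check: required fields and their types.
    required = {"action": str, "observed_temp_c": (int, float), "reason": str}
    for field, expected in required.items():
        if not isinstance(plan.get(field), expected):
            errors.append(f"missing or mistyped field: {field}")
    if errors:
        return plan, errors

    if plan["action"] not in ALLOWED_ACTIONS:
        errors.append(f"unknown action: {plan['action']}")

    # Unit range check.
    temp = plan["observed_temp_c"]
    low, high = TEMP_RANGE_C
    if not low <= temp <= high:
        errors.append("temperature outside sensor range")

    # Rate-of-change check against the previous reading.
    if minutes_elapsed > 0:
        rate = abs(temp - last_temp_c) / minutes_elapsed
        if rate > MAX_RATE_C_PER_MIN:
            errors.append("temperature changed faster than the rate limit")

    # Device state check.
    if plan["action"] == "reduce_load" and device_state != "running":
        errors.append("cannot reduce load on a device that is not running")
    return plan, errors
```

A plan with a non-empty error list would typically be rejected or routed to a human rather than passed to automation.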

Best Practices & Common Pitfalls (Checklist)

Design

- Define events that justify GenAI (e.g., repeated alarms) before picking models.

- Keep a minimal viable loop: retrieve → generate → validate → log.

- Prefer JSON outputs with schemas for automation.
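One way to picture the minimal viable loop is a single function that wires the four steps together and always writes an audit record. The sketch below uses stub functions in place of a real retriever, LLM call, and validator; every name in it is hypothetical.

```python
import json
import time

def run_once(event, retrieve, generate, validate, audit_log):
    """One pass of retrieve -> generate -> validate -> log for a single event."""
    context = retrieve(event)
    raw_output = generate(event, context)
    plan, errors = validate(raw_output)
    record = {
        "ts": time.time(),
        "event": event,
        "sources": context["sources"],   # provenance: what grounded the answer
        "plan": plan,
        "errors": errors,
        "accepted": plan is not None and not errors,
    }
    audit_log.append(record)             # logged whether accepted or not
    return record

# Stubs standing in for a vector DB lookup, an LLM call, and real validators.
def retrieve_stub(event):
    return {"sources": ["sop-12", "pump_a.temp[last 24h]"],
            "facts": "Pump A exceeded 90 C three times."}

def generate_stub(event, context):
    return json.dumps({"action": "inspect", "reason": context["facts"]})

def validate_stub(raw_output):
    try:
        plan = json.loads(raw_output)
    except json.JSONDecodeError:
        return None, ["not valid JSON"]
    if not isinstance(plan, dict):
        return None, ["not a JSON object"]
    known = plan.get("action") in {"inspect", "shutdown"}
    return plan, [] if known else ["unknown action"]

audit_log = []
result = run_once("repeated over-temp on Pump A",
                  retrieve_stub, generate_stub, validate_stub, audit_log)
print(result["accepted"], result["sources"])
```

Passing the steps in as functions keeps the loop itself tiny, so each stage can be swapped for a real implementation without touching the logging.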

Data

- Maintain a feature store for sensor stats (z-scores, FFT bands).

- Normalize units, time zones, and device metadata.

- Build red-team prompts to stress test hallucinations.
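The sensor statistics mentioned above (z-scores, FFT bands) could be computed along these lines. This is a standard-library sketch for illustration: the class and function names are made up, and the band energy uses a naive DFT that is fine for short windows but would normally be replaced by an FFT library.

```python
import cmath
import math
import statistics
from collections import deque

class RollingZScore:
    """Z-score of each new reading against a rolling window of earlier ones."""

    def __init__(self, window=60):
        self.values = deque(maxlen=window)

    def update(self, x):
        # Score x against the window *before* adding it, so an outlier
        # does not dilute its own score.
        if len(self.values) < 2:
            z = 0.0
        else:
            mean = statistics.fmean(self.values)
            std = statistics.pstdev(self.values)
            z = (x - mean) / std if std > 0 else 0.0
        self.values.append(x)
        return z

def band_energy(samples, sample_rate_hz, low_hz, high_hz):
    """Sum of squared DFT magnitudes for bins with frequency in [low_hz, high_hz]."""
    n = len(samples)
    if n == 0:
        return 0.0
    energy = 0.0
    for k in range(n // 2 + 1):
        freq = k * sample_rate_hz / n
        if low_hz <= freq <= high_hz:
            coeff = sum(x * cmath.exp(-2j * cmath.pi * k * i / n)
                        for i, x in enumerate(samples))
            energy += abs(coeff) ** 2
    return energy

# Usage: a synthetic 50 Hz vibration sampled at 400 Hz.
signal = [math.sin(2 * math.pi * 50 * i / 400) for i in range(64)]
print(band_energy(signal, 400, 40, 60) > band_energy(signal, 400, 100, 150))

scorer = RollingZScore(window=5)
scores = [scorer.update(v) for v in [1, 2, 3, 4, 5, 10]]
print(round(scores[-1], 2))
```

Features like these are cheap enough to compute on a gateway, which lets the LLM see a compact summary instead of raw samples.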

Edge/Cloud

- Use event-triggered inference; avoid constant polling with LLMs.

- Cache recent context at the edge to survive outages.

- Schedule OTA updates with rollbacks.
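Event-triggered inference can be as simple as a counter with a time window and a cooldown. The sketch below fires only when several alarms arrive close together and then stays quiet for a while; the class name and default thresholds are illustrative assumptions, not recommended values.

```python
from collections import deque

class AlarmTrigger:
    """Fire when min_alarms occur within window_s, then wait out cooldown_s."""

    def __init__(self, min_alarms=3, window_s=300.0, cooldown_s=600.0):
        self.min_alarms = min_alarms
        self.window_s = window_s
        self.cooldown_s = cooldown_s
        self._alarms = deque()
        self._last_fired = None

    def on_alarm(self, ts):
        """Record an alarm at time ts (seconds); return True if the LLM should run."""
        self._alarms.append(ts)
        # Drop alarms that have aged out of the window.
        while self._alarms and ts - self._alarms[0] > self.window_s:
            self._alarms.popleft()
        in_cooldown = (self._last_fired is not None
                       and ts - self._last_fired < self.cooldown_s)
        if len(self._alarms) >= self.min_alarms and not in_cooldown:
            self._last_fired = ts
            self._alarms.clear()
            return True
        return False

trigger = AlarmTrigger()
fired = [trigger.on_alarm(ts) for ts in (0, 100, 200, 210, 220, 230)]
print(fired)
```

In this run only the third alarm triggers generation; the burst right after it falls inside the cooldown, so no further LLM calls are made.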

Security

- Sign firmware and enforce mutual TLS.

- PII minimization; redact prompts/logs.

- Separate control plane and data plane networks.

Ops

- Track KPIs for latency, cost per event, and answer quality.

- Add a human-override and escalation path.

- Document model cards and change logs.

Performance, Cost & Security Considerations

Latency budgets

- Alarms & interlocks: target <200 ms at the edge; prefer classical models or tiny transformers.

- Operator assistants: 1–3 s acceptable with small/medium LLMs.

- Video: do detection/embedding at edge; send summaries upstream.

Cost levers

- Distill large models to smaller ones.

- Quantize to int8/4-bit for edge NPUs.

- Trigger generation only on anomalies, thresholds, or queries.

- Use RAG to cut tokens with precise context instead of giant prompts.

- Batch embedding jobs off-peak.
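To make the quantization lever concrete, here is a toy example of symmetric int8 quantization on a handful of weights. It is only meant to show the idea of trading precision for size; real toolchains typically add per-channel scales and calibration data, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]

weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, worst_error <= scale / 2 + 1e-9)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half a quantization step.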

Security

- Firmware signing, secure boot, HSM-backed keys.

- Least-privilege for device identities; rotate certs.

- Prompt and output PII redaction; tenant isolation.

- Safety filters and allow-lists on function calls that can affect actuators.
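An allow-list for function calls might look like the sketch below, where a model-proposed call is executed only if its name is registered and its arguments pass a per-function check. The function names, argument names, and the 16 to 28 °C bounds are assumptions for illustration.

```python
def _check_setpoint(args):
    """Accept only numeric setpoints inside an assumed safe band."""
    value = args.get("value_c")
    return isinstance(value, (int, float)) and 16.0 <= value <= 28.0

def _check_work_order(args):
    """Accept only work orders that name a non-empty asset ID."""
    asset_id = args.get("asset_id")
    return isinstance(asset_id, str) and bool(asset_id)

# Only functions listed here may ever be called on behalf of the model.
ALLOW_LIST = {
    "set_setpoint_c": _check_setpoint,
    "create_work_order": _check_work_order,
}

def authorize(call, kill_switch_engaged=False):
    """Return (allowed, reason) for a proposed call like {"name": ..., "arguments": {...}}."""
    if kill_switch_engaged:
        return False, "kill switch engaged"
    name = call.get("name")
    checker = ALLOW_LIST.get(name)
    if checker is None:
        return False, f"function not on allow-list: {name}"
    arguments = call.get("arguments")
    if not isinstance(arguments, dict) or not checker(arguments):
        return False, "arguments rejected"
    return True, "ok"

print(authorize({"name": "set_setpoint_c", "arguments": {"value_c": 22.5}}))
print(authorize({"name": "open_valve", "arguments": {}}))
```

Denying by default means a new actuator function stays unreachable until someone deliberately adds it and writes its argument check.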

Real-World Use Cases (Mini Case Studies)

- Predictive Maintenance Copilot (Manufacturing)

  - Signal: vibration + temperature spikes.

  - Gen step: retrieve similar incidents + SOP.

  - Output: step-by-step fix with expected tools/time; generate a CMMS ticket in JSON.

  - Why GenAI: Converts patterns into human-readable instructions that reduce mean time to repair.

- Energy Optimization (Buildings)

  - Signal: occupancy + HVAC power + weather forecast.

  - Gen step: propose setpoint schedule, explain comfort impact.

  - Edge note: apply changes with guardrails (±2°C max).

  - Why GenAI: Balances comfort narratives with numeric constraints.

- Field Maintenance Assistant (Utilities)

  - Signal: meter telemetry + photos from technician.

  - Gen step: multimodal report that ties image evidence to sensor anomalies.

  - Output: structured JSON for billing/repair.
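The ±2°C guardrail from the energy optimization case can be enforced with a small clamp applied before any setpoint reaches the HVAC controller. This sketch assumes the limit means "at most 2 °C away from the current setpoint per change", which is one possible reading; the function name is hypothetical.

```python
def clamp_setpoint(current_c, proposed_c, max_delta_c=2.0):
    """Limit a proposed setpoint to within max_delta_c of the current one.

    Returns (applied_setpoint, was_clamped).
    """
    low = current_c - max_delta_c
    high = current_c + max_delta_c
    applied = min(max(proposed_c, low), high)
    return applied, applied != proposed_c

print(clamp_setpoint(22.0, 26.0))
print(clamp_setpoint(22.0, 21.0))
```

Logging the `was_clamped` flag gives operators a record of how often the model proposes changes outside the allowed band.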

FAQs

What is Generative AI in IoT?

Using LLMs/multimodal models to summarize, explain, and orchestrate actions from sensor data and domain knowledge.

How is it different from predictive analytics?

Predictive models quantify (probabilities, anomalies). GenAI communicates and coordinates (explanations, steps, tickets) grounded in that data.

Edge vs Cloud—how to choose?

- Edge: tight latency, privacy, unreliable links.

- Cloud: heavy models, global context, lower on-device complexity.

Many systems use a hybrid of the two.

How to reduce hallucinations?

RAG with sensor windows + SOPs, function-calling with schemas, and validators on units/limits.

Which protocols work best?

MQTT for IoT scale; OPC UA when you need industrial semantics and existing PLC integrations.

What data do I need?

At least labeled incidents, recent sensor windows, and curated documents (manuals, tickets, SOPs) for retrieval.

Quick How-To: Pilot in 4 Weeks

- Pick one event (e.g., “Repeated over-temp on Pump A”).

- Assemble context: last 24h signals, SOPs, top 10 past tickets.

- Build flow: user question or alert → retrieve → generate JSON plan → validate.

- Run shadow mode: compare GenAI outputs to human responses.

- Measure: latency, cost/event, operator satisfaction.

- Harden: add red-team prompts, rollbacks, and OTA update plan.
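For the shadow-mode and measurement steps, a summary like the one below may be enough to start with. It compares the model's proposed action with what the human actually did and reports agreement, median latency, and average cost per event. The record fields and the sample numbers are made up for illustration.

```python
import statistics

def shadow_report(records):
    """Summarize shadow-mode records with agreement, latency, and cost figures."""
    if not records:
        return {"events": 0, "agreement_rate": None,
                "median_latency_s": None, "cost_per_event": None}
    agreed = sum(1 for r in records if r["model_action"] == r["human_action"])
    return {
        "events": len(records),
        "agreement_rate": agreed / len(records),
        "median_latency_s": statistics.median(r["latency_s"] for r in records),
        "cost_per_event": statistics.fmean(r["cost"] for r in records),
    }

# Hypothetical shadow-mode log entries.
records = [
    {"model_action": "inspect", "human_action": "inspect", "latency_s": 1.0, "cost": 0.01},
    {"model_action": "shutdown", "human_action": "inspect", "latency_s": 2.0, "cost": 0.02},
    {"model_action": "inspect", "human_action": "inspect", "latency_s": 3.0, "cost": 0.03},
    {"model_action": "reduce_load", "human_action": "reduce_load", "latency_s": 4.0, "cost": 0.04},
]
report = shadow_report(records)
print(report)
```

Disagreements are worth reviewing case by case, since they show either a model error or a gap in the retrieved context.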